SICP 1.2.3 - Orders of Growth

Covering SICP section 1.2.3 and exercises 1.14 and 1.15.

Table of Contents

Covering SICP section 1.2.3 and exercises 1.14 and 1.15.

Previously, I was thinking of the chunk of SICP material I was addressing at a given time as the subchapters - as in 1.x. I think I need to break things down more and think about a sub-sub chapter as the unit of material I'm trying to address. So I'll name and structure my posts accordingly from now on.

Other Materials Consulted

L07 Orders of Growth | UC Berkeley CS 61A, Spring 2010

Chapter 3 Analysis of algorithms

Notes

Processes can use computational resources at very different rates, as we've already seen. The two constraints we've been looking at are time (in terms of computational steps) and space (in terms of computational memory). We describe orders of growth using $\Theta(n)$ notation, and it seems like we do so in terms of a particular resource. So if we are being precise, we don't say a function has $\Theta(n)$ order of growth as a blanket statement, but instead that it has $\Theta(n)$ order of growth for some particular resource that we're talking about. So this means that when we're analyzing procedures, we'll often be talking about two orders of growth - one for time/number of computational steps, and one for space/memory - and they might be different.

Orders of growth analysis focuses on where the procedure winds up in terms of complexity when given a harder/more complex case of whatever the function is trying to compute. So you figure out the resource use of the procedure in terms of some n and some resource and you state that resource use in terms of math. To figure out the order of growth you look at the leading term and coefficients drop away. $n^2$ will ultimately beat $n$ as n goes up. Applying the basic idea that only the n of the leading term exclusive of the coefficient really matters for this kind of analysis, 2n, 100n and $n + 1$ are all treated as the same order of growth $\Theta (n)$.

It's possible that a procedure with a worse order of growth with regards to some resource will do better than a procedure with a better order of growth for that same resource if you give the two procedures smaller/easier problems. This page gives an example of an Algorithm A that takes $100n + 1$ steps to solve a problem and an Algorithm B that takes $n^2 + n + 1$ steps.

Here's a table from this page representing these two Algorithms:

| Input | Run time of | Run time of |

| size | Algorithm A | Algorithm B |

| 10 | 1 001 | 111 |

| 100 | 10 001 | 10 101 |

| 1 000 | 100 001 | 1 001 001 |

| 10 000 | 1 000 001 | > 1010 |

Here's a table from this page representing some common orders of growth

| Order of | Name |

| growth | |

| O(1) | constant |

| O(logb n) | logarithmic (for any b) |

| O(n) | linear |

| O(n logb n) | “en log en” |

| O(n2) | quadratic |

| O(n3) | cubic |

| O(cn) | exponential (for any c) |

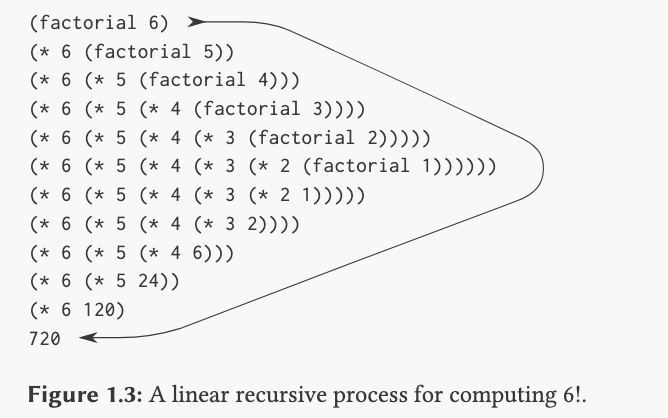

The linear recursive factorial function we used earlier is worth analyzing in terms of orders of growth.

The bigger the n, the more computational steps (ultimately reducing to multiplications) it's going to perform. The number of steps increases linearly - if you give factorial an n of 6 then it's going to have to do an extra multiplication compared to if n were 5. The space also increases linearly, and I'm not sure how crystal clear this distinction was in my mind previously, but it's worth reflecting on some. Because of how the procedure functions, the interpreter winds up having to keep track of a lot deferred calculations that it's waiting to perform until it has all the necessary arguments. It can't evaluate (* 6 (factorial 5)) until it figures out the value of factorial 5), and to do that it needs to evaluate factorial 4, and so on. And the space it needs increases linearly as n increases - increasing n by 1 means one more deferred operation to keep track of. So it happens to be the case that with the linear recursive factorial function, both the computational steps required grows as $\Theta(n)$ and the space required grows as $\Theta(n)$.

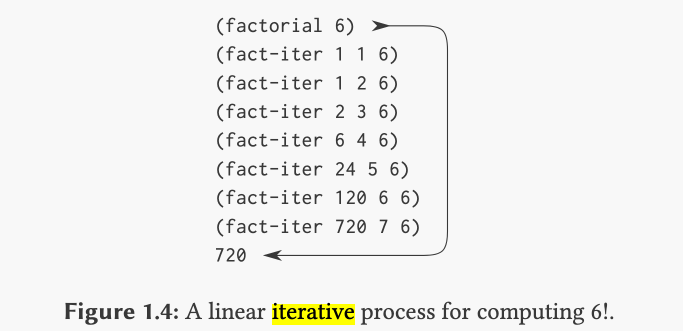

For the iterative factorial function...

...the computational steps grows as $\Theta(n)$ but the space required is constant. We describe that as $\Theta(1)$.

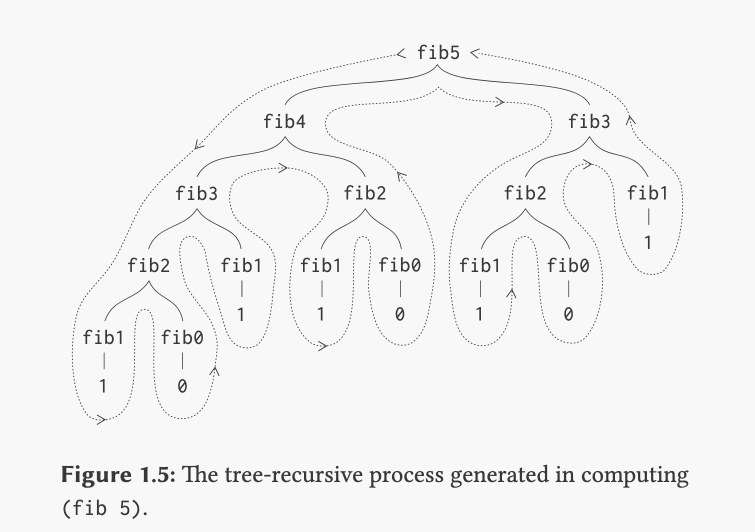

For the tree-recursive Fibonacci procedure...

the book says:

The tree-recursive Fibonacci computation requires $\Theta(\phi^n)$ steps and space $\Theta(n)$, where $\phi$ is the golden ratio described in 1.2.2.

And earlier in the book they said of the tree-recursive Fibonacci procedure:

Thus, the process uses a number of steps that grows exponentially with the input. On the other hand, the space required grows only linearly with the input, because we need keep track only of which nodes are above us in the tree at any point in the computation. In general, the number of steps required by a tree-recursive process will be proportional to the number of nodes in the tree, while the space required will be proportional to the maximum depth of the tree.

(emphasis added)

I think I follow re: computational steps. I'm not 100% sure what they mean by "because we need keep track only of which nodes are above us in the tree at any point in the computation."

I tried representing the evaluation process for (fib 5) in a Scheme window:

(fib 5)

(+ (fib 4)(fib 3))

(+ (+ (fib 3)(fib 2))

(+ (fib 2)(fib 1)))

(+ (+ (+ (fib 2)(fib 1))(+ (fib 1)(fib 0))

(+ (+ (fib 1)(fib 0)(fib 1)))))

(+ (+ (+ (+ (fib 1)(fib 0)(fib 1))(+ (fib 1)(fib 0))

(+ (+ (fib 1)(fib 0)(fib 1))))))

There's something still not clicking here though. I wouldn't be able to explain this to someone else.

Exercises

✅❌ Exercise 1.14

(I figured out only part of the answer)

Exercise 1.14: Draw the tree illustrating the process generated by the

count-changeprocedure of 1.2.2 in making change for 11 cents. What are the orders of growth of the space and number of steps used by this process as the amount to be changed increases?

I tried making some trees for count-change when invoked with the values 11 and 12.

I also made some graphs that tried to show the number of computational steps involved starting around here

https://discuss.criticalfallibilism.com/t/justin-does-sicp/96/81?u=justinceo

I also watched videos like this:

This was all helpful but I couldn't get quite to full confidence on how to analyze the orders of growth rigorously, at least for the computational steps.

My intuition there is that some sort of exponential growth of steps is happening, so it should take exponential time.

OTOH, re: the memory/space, that seems to grow linearly (that would be $\Theta(n)$).

I was correct on the memory/space usage. One way to think about the issue here is that the maximum depth of the tree is going to be determined by the depth of the series of recursive calls that occur when counting down with the smallest denomination (i.e. pennies), and that depth is going to increase by 1 when the n is increased by 1.

And while I was correct that the growth re: the time/steps was some sort of exponential growth, that's a very imprecise answer and way more precision is possible.

For understanding this problem I found the explanation here by Drew Hess and the graphs here by Sébastien Gignoux particular helpful.

Quotes from Hess link unless otherwise noted.

The count for

(count-change 11)is 55. The graph for this evaluation isn't shown here, but if you draw it on paper, you can see that it generates a tree recursive process.Each time procedure cc is called, it returns 1, 0 or the sum of two subtrees. Let's say, then, that each invocation of

cc, that is, each node in the evaluation tree, performs one operation.

Makes sense so far.

For amount n and kinds-of-coins 5, the number of operations performed by procedure

ccis 1 plus the number of operations performed by the subtree generated by(cc n 4), plus the number of operations performed by the subtree generated by(cc (- n 50) 5). Let's use $N(n,m)$ to denote the number of operations performed by a subtree(cc n m). Then we have:$N(n,5) = 1 + N(n,4) + N(n − 50,5)$

This is just representing the number of operations performed within $N(n, 5)$ as being the sum of the recursive calls in the else clause of count-change.

Let's expand the first N term by repeating this process for (cc n 4):

$N(n,4) = 1 + N(n,3) + N(n − 25,4)$

Keep expanding the first N term of each subtree:

$N(n,3) = 1 + N(n,2) + N(n − 10,3)$

$N(n,2) = 1 + N(n,1) + N(n − 5,2)$

$N(n,1) = 1 + N(n,0) + N(n − 1,1)$

(cc n 0)is a termination case: it performs only a single operation (returns 0):$N(n,0) = 1$

$N(n,1) = 1 + 1 + N(n − 1,1)$

Something that might be a little confusing (I was confused, anyways!) - $N(n,0)$ returns 0, but for the purposes of the analysis we are doing here, it still counts as a computational step, which is why Hess says N(n,0) = 1.

So for $N(n,1) = 1 + 1 + N(n − 1,1)$, it has the computational step for its "node" as the first 1, and then since we hit a base case for $N(n,0)$ we now know that's 1, so that's the second 1. So then we just need to figure out the $N(n − 1,1)$.

Let's now consider the other subtree of

(cc n 1),(cc (- n 1) 1):$N(n − 1,1) = 1 + N(n − 1,0) + N(n − 2,1)$

Once again,

(cc (- n 1) 0)is a termination case and performs only a single operation. This process continues until theamountargument ofccis(- n n), at which point we've fully evaluated the(cc n 1)subtree:$N(n − n,1) = 1$

$N(n − n,1) = 1$

Working our way back up:

$N(n − (n − 1),1) = 1 + 1 + 1 = 3$

$N(n − (n − 2),1) = 1 + 1 + N(n − (n − 1),1) = 1 + 1 + 3 = 5$

$N(n − (n − 3),1) = 1 + 1 + N(n − (n − 2),1) = 1 + 1 + 5 = 7$

$N(n − (n − 4),1) = 1 + 1 + N(n − (n − 3),1) = 1 + 1 + 7 = 9$

It's easy to see that this process continues until we're back to (cc n 1):

$N(n,1) = 1 + 1 + N(n − 1,1) = 2n + 1$

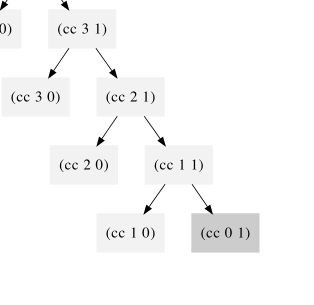

We can see an example of the pattern described above in this snippet of Gignoux's tree:

The number of computational steps for(cc 3 1) consists of 1 (for the initial invocation of cc 3 1) + $N(3,0) + N(2, 1)$ computational steps, or, after we evaluate cc 3 0, $1 + 1 + N(2, 1)$ computational steps. And you keep evaluating the adding up steps until you get to the base case. If you do this, you get 2(3) + 1 or 7 steps, which is consistent with our $2n + 1$ value from above.

We can verify this by drawing the graph for

(cc 11 1)and counting the number of nodes. Note that the amount of storage required for an applicative-order evaluation of the(cc n 1)subtree is also proportional to $2n + 1$, since the + operations are deferred until the value of each subtree is calculated.

I'm not sure if I quite follow the bit about the amount of storage required being proportional to $2n + 1$,. The number of computational steps being $2n + 1$ makes sense but it seems like the storage required would be more like $n$ or $n + 1$ or something like that if the amount of storage required is based on the depth of the number of deferred steps. It's possible I'm not understanding what exactly the author means by "proportional".

When we're dealing with θ-notation orders of growth, we can ignore constant factors, so it's sufficient to say that evaluating

(cc n 1)requires order n number of steps and order n storage, since $2n + 1$ is linear in n. Dropping these constant terms makes it easier to calculate orders of growth for the other terms in the process tree.

Makes sense.

(count-change n)generates a tree-recursive process. We know from the text that tree-recursive processes require space proportional to the depth of the tree, and we know that(cc n 1)generates the deepest subtree of the process (since other subtrees reduce the size of n), so we can say at this point that count-change requires space of order n (i.e., linear).

This discussion of the space requirements seems fine to me.

Let's go back to the root of the subtree which generated :

N(n,2) = 1 + N(n,1) + N(n − 5,2)

We know the value of the first N term. The 2nd N term is:

N(n − 5,2) = 1 + N(n − 5,1) + N(n − 10,2)

This expansion continues until n is <= 0, or roughly n/5 times, since each expansion of the 2nd term subtracts 5 from its argument. Each one of these expansions produces an N(a 1) term, where a is the amount, and each of those terms produces 2a + 1 operations.

You can see the phenomena described in the last sentence of the above quote in visual form in one of Gignoux's charts:

The green nodes represent the initial value being reduced by 5, and the nodes underneath each green node represent the $2n + 1$ operations produced by each of those green nodes.

Subtracting a constant from n at each step doesn't change the fact that each step is a linear (order n) process, so we can ignore the subtraction when calculating the order of growth. n times n/5 steps is $\frac{n^2}{5}$ steps. This term overwhelms the 2n + 1 steps we calculated for the previous branch, so we can ignore the 2n + 1 term now and say that calculating

(cc n 2)has order $\frac{n^2}{5}$ steps.

Shouldn't we say the order is $n^2$ steps? I thought that for order of growth analysis we didn't care about constants, and that would seem to go as well for division by constants as for multiplication by constants.

Now we consider the next node up the tree, the one which generated

(cc n 2):$N(n,3) = 1 + N(n,2) + N(n − 10,3)$

The analysis for this step is similar to the one we performed for $N(n,2)$: calculating

(cc (- n 10) 3)produces roughly $n/10$ more nodes, each of which evaluates(cc x 2). We know that evaluating (cc x 2) is order x2 / 5, so(cc n 3)is roughly $n/10$ times that, $n^3 / 50$. Again, the $n^3$ term dominates the growth of the process, so we can ignore the lesser orders of growth of the other nodes we've considered and say that(cc n 3)has order $n3 / 50$ growth.Calculating N(n,4) and N(n,5) follows similar logic. It's then straightforward to show that the number of steps required to evaluate

(cc n 5)is $θ(n5)$.

I think I basically follow except for the aspect about retaining the division by a constant in the denominator, and I already complained about that above.

Gignoux has a more rigorous and thoroughly mathy approach which I also liked. He uses sigma notation, which I read up on in order to understand his solution. It was less intimidating/complex than I imagined it to be once I actually started reading. Anyways, let's look at Gignoux's approach, taking as a given that $T(n, 1) = 2n + 1$ (since we've already seen that that is true), and looking at $T(n, 2)$.

So we know that we're going to have $n/5$ + 1 times that we can subtract a nickel from an amount before it reaches 0 or a negative value. And we know that each one of those calls (except the final one) is going to produce 2n + 1 calls themselves. So in order to figure out the total number of calls for (cc n 2), we want to add up $n/5$ + 1 + the sum of the number of calls made by the cc n 1 calls that result from the (cc n 2) calls.

$T(n,2);=\frac n5+1+;\sum\limits_{i=0}^{n/5}T(n-5i,1) $

I believe the next step is that we take $n - 5i$ and plug that as our $n$ into $2n + 1$. I started to lose the plot of the steps a bit here. I may come back to it later.

Gignoux also made great trees/graphs. I strongly suggest looking at them if you're interested in this problem.

✅🤔 Exercise 1.15

(seem to have arrived at the right conclusion but not sure if all my reasoning is sound)

The sine of an angle (specified in radians) can be computed by making use of the approximation $\sin x \approx x$ if x is sufficiently small, and the trigonometric identity

$\sin x = 3\sin{\frac{x}{3}} - 4\sin^3{\frac{x}{3}}$

to reduce the size of the argument of sin. (For purposes of this exercise an angle is considered “sufficiently small” if its magnitude is not greater than 0.1 radians.) These ideas are incorporated in the following procedures:

(define (cube x) (* x x x)) (define (p x) (- (* 3 x) (* 4 (cube x)))) (define (sine angle) (if (not (> (abs angle) 0.1)) angle (p (sine (/ angle 3.0)))))

- How many times is the procedure

papplied when(sine 12.15)is evaluated?

The way sine works is that when the absolute value of angle is not above 0.1, it returns angle. Otherwise, it invokes p with a recursive call to sine but with the angle having been divided by 3. So I think what will happen is that the procedure will keep building up a deferred chain of p's until the angle is less than 0.1. Starting from 12.15 and dividing by 3 takes 5 steps to get to a value below 0.1, so my initial guess is that the procedure p will be applied 5 times.

I tested this guess in DrRacket and got results consistent with it.

- What is the order of growth in space and number of steps (as a function of

a) used by the process generated by thesineprocedure when(sine a)is evaluated?

(Note that I referred to n instead of a below)

My guess is that both space and number of steps would be $\Theta(\log_{3}n$). The key factor that determines the number of computational steps is sine's division by 3. As an experiment, let's consider the result if (if (not (> (abs angle) 0.1)) were to be (if (not (> (abs angle) 1)) instead.

In that case, in addition to the initial invocation of sine, we get 3 more. We also get 3 invocations of p and 3 invocations of cube. $\log_{3}(27) = 3$. So we get $(3 \cdot \log_{3}(27)) + 1$ steps, or 10 total steps.

With this hypothetical alternate program, the results for (sine 81) are similar. $\log_{3}(81) = 4$. We get $(3 \cdot \log_{3}(81)) + 1$ steps, or 13 steps total.

So for this alternate, rewritten version of the program, the general form for expressing the number of steps is $(3 \cdot \log_{3}(n)) + 1$.

The fact that the actual version of the program uses 0.1 instead of 1.0 does not change anything fundamental. I think it basically just adds another three steps onto the end (the additional divisions required to go from a number near 1 to 0.1).

I tested out some values:

sine 0 = 1 sine call

sine 1 = 4 sine + 3p calls + 3 cube calls (10 total)

sine 3 = 5 sine + 4 p calls + 4 cube calls (13 total)

sine 9 = 6 sine + 5 p calls + 5 cube calls (16 total)

sine 27 = 7 sine + 6 p calls+ 6 cube calls (19 total)

So the pattern from sine 3 on is $(3 \cdot \log_{3}(n)) + 10$ calls, which seems clearly to be $\Theta(\log_{3}n)$ in terms of the number of steps.

As for space, I guess that'd be determined by the depth of the chain of recursive calls of sine and p you build up before hitting sine's base case. So something like $(2 \cdot \log_{3}n) + 1$ space? So basically $\Theta(\log_{3}n)$ as well.

Unanswered Questions

- What's the exact meaning of the bit about "because we need keep track only of which nodes are above us in the tree at any point in the computation."?