Intellectual Honesty, Part 2

Part 2 of comments on the eleventh chapter in Leonard Peikoff’s book "Understanding Objectivism", "Intellectual Honesty".

Disclaimer: I’m not an expert on philosophy. I’m just a person trying to figure things out for myself, and speak for no one but myself.

This post is part of a series of posts about Lecture Eleven, “Intellectual Honesty”, in Leonard Peikoff’s book Understanding Objectivism.

In the last post I considered Peikoff’s definition of dishonesty and his claims that some ideas are inherently dishonest. Peikoff had begun to turn to the issue of a person’s context as part of judging whether they are dishonest.

One factor Peikoff says is relevant is the time a person lives in. He uses the example of Andrei in We the Living. He says that at the time of the Russian Revolution it was conceivable, at least in a fictional context, for someone to think Russia was a noble experiment and that the period of grief would be short. But someone today (he’s speaking in 1983) could not honestly take the view that the problems are just temporary and that the concentration camps don’t mean anything.

I agree with what Peikoff is saying here, and thinking about it brings me back to a point in the last post where I had some doubts. Peikoff thinks that certain viewpoints are inherently dishonest, and one example he used is being a modern advocate of Nazism or communism. I was skeptical. One thing that troubles me about the idea of an inherently dishonest viewpoint is that it seems to say a viewpoint is dishonest regardless of the context in which it is held. But a viewpoint is always held in some context. I think holding even rather extreme, ridiculous views would be understandable if, say, one were raised by parents who made a great effort to encourage those views. They might also present a picture of reality that left out information, arguments, and evidence contradicting those views. In that case, the child could honestly hold views that I think Peikoff would describe as inherently dishonest. That’s an extreme case, though. Given a more standard cultural context, where one wasn’t raised by weird Nazis or whatever, I think you could say with reasonable certainty that holding certain views is an indicator of dishonesty. But I think part of what I was struggling with in my doubts about Peikoff’s category of inherently dishonest viewpoints is that I felt I needed more context to come to a judgment. I needed to rule out some extreme context like being raised by weird Nazis or nihilists or whatever.

Peikoff says that context varies with a person’s profession and the knowledge they have from that profession:

There’s a big difference here, for instance, between the man on the street who reads in some tabloid that the world is coming to an end because of the pollution and ecology, as against some left-wing scientist who comes out with doomsday scenarios in defiance of all known facts and of all scientific method, in order to foster his socialist conclusions. The scientists who do this are prima facie much more dishonest than the individual who reads the Enquirer. Or, as another example of this point, the man on the street who simply says, “There are no absolutes,” and doesn’t have the faintest idea of the philosophic implications, is in a very different position from the philosopher who knows what this means, and who knows that it’s a complete assault, by implication, on reason and reality.

I agree with this. I think there is a theme that connects factors like profession, historical era, and the person’s age (mentioned in the last post) in judging dishonesty. The theme is captured by this question: given the person’s context, how much knowledge do they have to ignore to advocate for the position they’re advocating? The more they have to ignore, the more dishonest they are. I think this connects to Elliot Temple’s definition of lying. Temple says that “A lie is a communication (or a belief, for lying to yourself) which you should know is false.” The state of your knowledge on some topic (your context) is what determines the set of things that you should know. So if you have relevant knowledge on a topic and ignore it, you are being dishonest. And your dishonesty is greater to the extent that you need to ignore more knowledge.

Peikoff also says intelligence is important in judging context.

Peikoff says that one way to figure out whether someone else is being honest is to ask yourself if you yourself could have once honestly believed something. That seems like an ok way to avoid being super-dogmatic/judgmental in a rationalistic way, which is one of the things Peikoff is trying to focus on. It could be problematic, though. For one thing, if you’re super harsh to yourself regarding your honesty and moral judgments, you might still be super harsh towards other people. OTOH, if you’re too lenient towards yourself regarding assessing your honesty, you might extend that same leniency towards others. So I think this approach only works well if you’re pretty reasonable in judging your own honesty.

Peikoff gives a caveat about this approach. He says that if someone believes something wrong that you yourself once believed, that doesn’t prove he’s honest. He might have a context in which he should know that the thing he believes is wrong. So you still have to take the other person’s context into account. He also says that something can be clear to you and confusing to someone else, and that doesn’t mean they’re dishonest; it depends on their context. I agree with all this.

Peikoff says the manner in which someone argues is a good indicator of honesty versus dishonesty. Someone who uses ad hominem attacks, is sarcastic, and so on differs from someone who listens and tries to respond to your points. I agree. Peikoff also says that someone can get emotional, feel attacked, and be mean in a discussion, but then apologize later. That would indicate he’s not dishonest, if it’s a one-time thing and not a pattern. He further notes that someone (like a professor with evil Kantian views) can be very practiced at their dishonesty and remain super calm and seem reasonable. So again, manner of arguing isn’t a perfect indicator. You have to look at the whole picture. I agree with these points.

Peikoff formulates the issue of judging context this way:

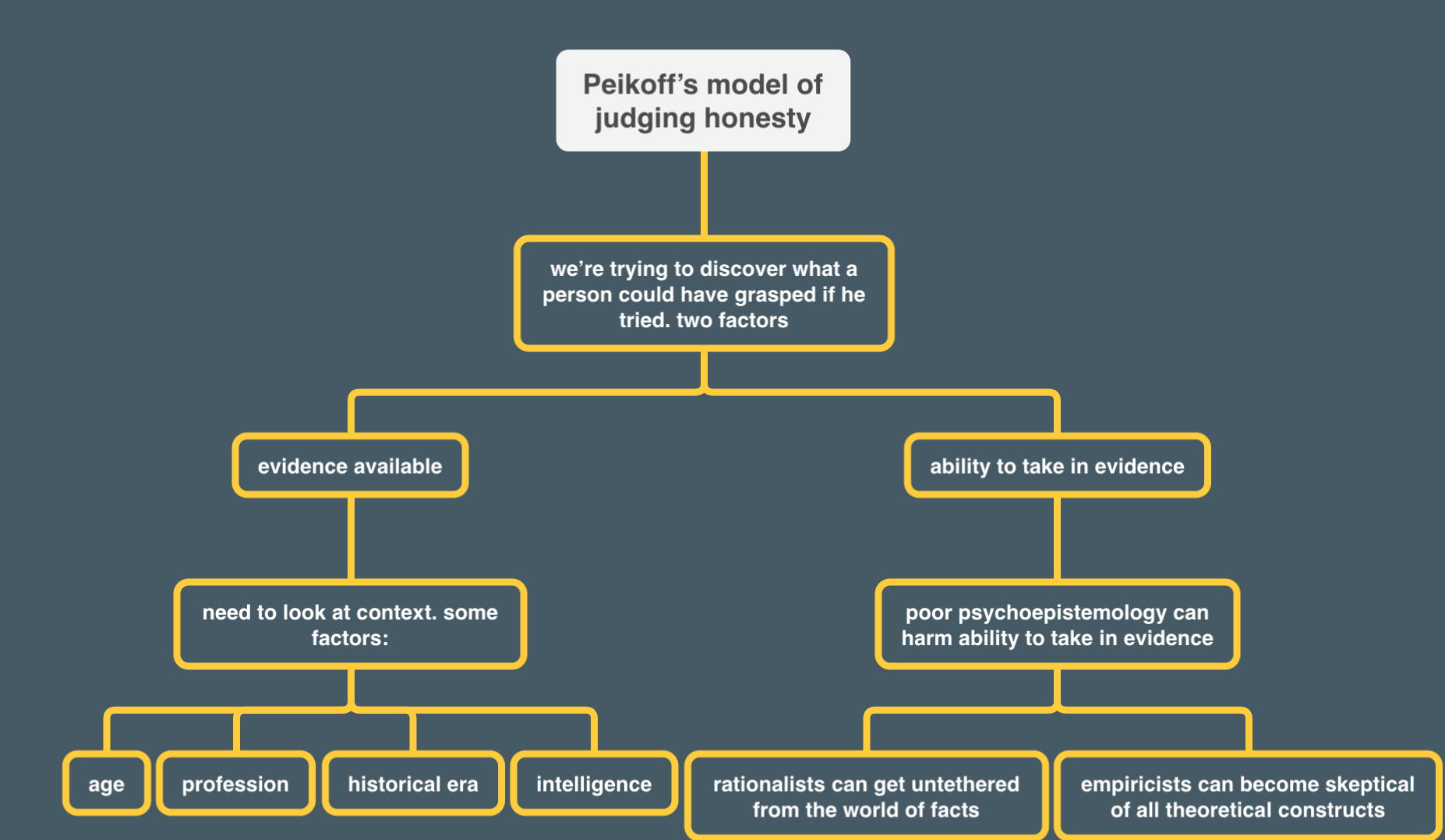

When we judge a person’s context, we’re trying to discover what he could have grasped if he tried.

Peikoff says honesty involves two factors: the evidence available to the person and the ability of their consciousness to take in the evidence. On the second point, Peikoff says that automatized thinking processes (what Rand called psychoepistemology) are relevant. If your psychoepistemology is very poor, you can be effectively deprived of evidence. As an example, Peikoff says that rationalists can become oblivious to factual data due to errors in their automatized methodology. He describes an advocate of communism with a small “c” who believes that property should be owned in common. Such a person could rationalistically project a socialist utopia of peaceful cooperation and treat real-life examples as irrelevant to his ideal. I guess Peikoff has a particular distinction in mind between this case and the inherently dishonest communists he discussed earlier. In this case we have a utopian living in castles in the sky, whereas in the other case we had more overt statements of evil. I wonder about Peikoff’s concession that this “small ‘c’” communist is innocent, though. It would depend a lot on context. But let’s imagine an adult in the modern age with access to a library and the internet. Suppose he hasn’t engaged with criticisms of communism and thought through how they might apply to his model. Suppose he also hasn’t carefully thought about and explained to himself why real-life cases aren’t counterexamples to his ideal. Wouldn’t there be some dishonesty in failing to do that while still being a strong advocate of the view? It’s similar to a case I discussed in a dialog. There I considered someone who holds themselves out as an expert in some field and keeps advocating a theory without having addressed a refutation of it.

If you advocate something, there’s an implication (or hell, there should be an implication, in anything resembling a rational society) that you’ve thought about that thing to some degree. You’ve given the matter some attention and come to some sort of considered judgment on the point. In fact, I think people often just attach themselves to ideas without giving them any thought or considering alternatives, and that’s bad. But in any case, if you advocate something, you should have given it some thought. You have a responsibility to have given the matter some mental attention in an objective, unbiased manner. The amount of mental attention you’re obligated to give it depends on the importance of the matter you’re advocating for. If you’re advocating that a friend watch a television show, the attention you give might be fairly slight. If you’re advocating wholesale changes to how society functions, as in communism, you have a responsibility to have given the matter a great deal of attention. So if you fail in that responsibility while continuing to be an advocate, I think there is some dishonesty there. That holds at least for a person in a standard context, which excludes, for example, a child who is a red diaper baby.

Peikoff next considers the example of an empiricist who is so distrustful of abstractions that he refuses to accept a theoretical conclusion because he’s suspicious of theoretical conclusions as such. E.g., he refuses to concede that he agrees with capitalism even after you address all his objections, because he doesn’t go in for agreeing with theoretical systems. Peikoff thinks that both the rationalist and empiricist cases are fixable if the person is young but can become entrenched if he’s old. He’s pretty bleak about the result:

In such a case, I would say the person is not necessarily dishonest, but for practical purposes, it comes out to be the same as if he were, because it has the same result: You can no longer deal with the person.

Peikoff thinks that an extremely entrenched rationalist or empiricist might not be dishonest but has to be dealt with the same way as a dishonest person. He thinks this can get so extreme that it’s like a mental illness, and he compares it to being psychotic. He makes clear, though, that he doesn’t think being, e.g., a super rationalist is literally the same as being psychotic. He also thinks that at a certain point even a super rationalist has to admit that something is wrong with their viewpoint. He gives the example of a rationalistic apologist for the Soviet system:

Even his rationalism cannot literally blind him to the butchery of so many millions of people. So there you have to say if he is not psychotic, it doesn’t make any difference if he’s rationalist or what; he is dishonest.

What I find is that apologists for communism use scapegoats. For example, they’ll concede that people died but blame sanctions or saboteurs or things like that. So they have some sort of explanation for what’s going on, however dubious. Communist countries are supposed to implement a superior economic and social system that will produce a superior sort of human being. I don’t think apologists have a great answer for why those countries seem so fragile and vulnerable to these alleged sanctions and sabotage. Anyway, I think the rationalists Peikoff describes can be quite skilled at creating rationalizations for certain aspects of their system. And if something is really bad, they’ll deny it or say it’s greatly exaggerated by Western propaganda. People are quite good at literally blinding themselves. So I’m not sure I fully agree with Peikoff here. I suspect that people can blind themselves to butchery and still be dishonest, but that their dishonesty is at some higher or meta level. People engage in very biased and one-sided rationalizing to defend some particular viewpoint or system. If they were more honest, they could introspect and see that they’re bending over backwards to “save” one theory in ways they wouldn’t for others, and they could recognize this as bias. But they don’t. So maybe that’s where the dishonesty is? This is half-baked and just a thought.